K Nearest Neighbors Example

K nearest neighbors (KNN) has many applications, including voter prediction and credit rating.

One example: classify potential voters as likely to support one party or another in order to predict election outcomes. In R, the simplest KNN implementation is in the class library and uses the knn function. KNN is easy to implement and understand, but it has a major drawback: it becomes significantly slower as the size of the data in use grows.

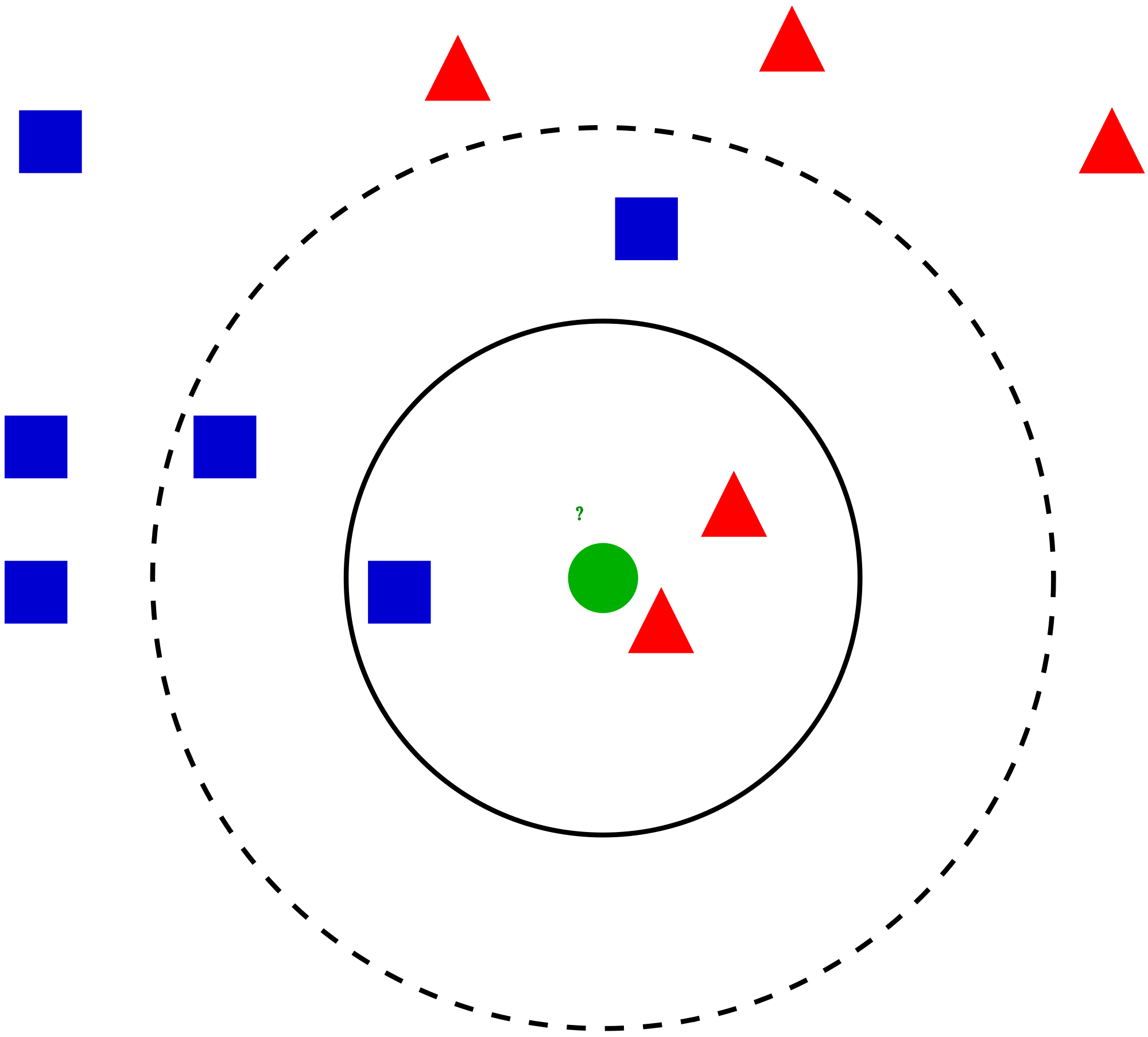

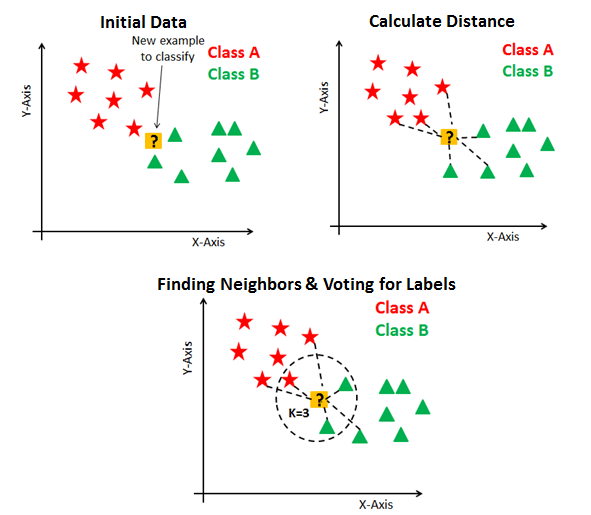

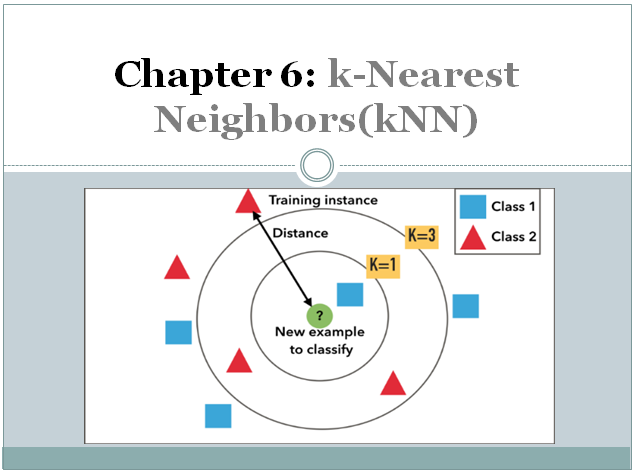

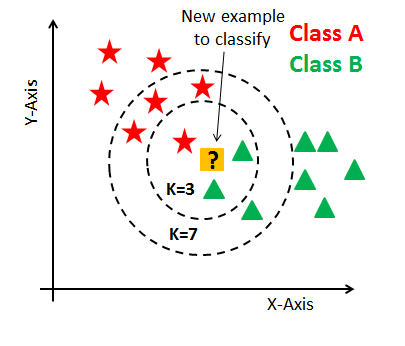

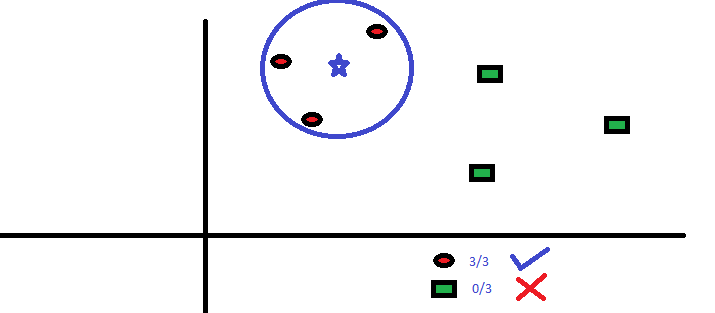

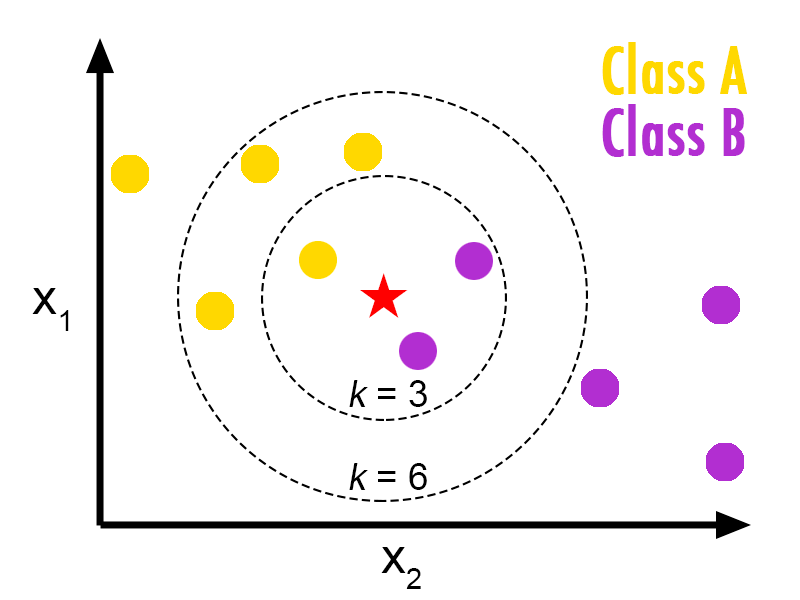

For both classification and regression, the input consists of the k closest training examples in the feature space. KNN works by finding the distances between a query and all the examples in the data, then selecting the k examples closest to the query. The steps are: determine the parameter k (the number of nearest neighbors), calculate the distance between the query instance and all the training samples, sort the distances, and determine the nearest neighbors based on the k-th minimum distance.
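The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes Euclidean distance, the function name `knn_classify` is made up for this example, and the toy training points are hypothetical.

```python
import math
from collections import Counter

def knn_classify(query, training, k):
    """Classify `query` by a vote among its k nearest training samples.

    `training` is a list of (features, label) pairs (hypothetical layout).
    """
    # Calculate the distance between the query and every training sample
    # (Euclidean distance is an assumption; other metrics are possible).
    distances = [(math.dist(query, features), label) for features, label in training]
    # Sort by distance and keep the k smallest.
    distances.sort(key=lambda pair: pair[0])
    nearest = distances[:k]
    # Vote: the most common label among the k neighbors wins.
    # On a tie, Counter returns the tied label that was counted first,
    # which here is the one belonging to the closer neighbor.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated groups in a 2-D feature space.
training = [
    ((1.0, 1.0), "A"),
    ((1.5, 2.0), "A"),
    ((2.0, 1.0), "A"),
    ((6.0, 6.0), "B"),
    ((7.0, 5.5), "B"),
    ((6.5, 7.0), "B"),
]

prediction = knn_classify((1.8, 1.4), training, k=3)
print(prediction)  # A
```

Note that every query scans the whole training list, which is why this brute-force approach slows down as the data grows.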

Let's take the wine example below. Another example is a customer named Monica: find the 5 customers most similar to Monica in terms of attributes and see what categories those 5 customers were in. This gives a numerical example of the k nearest neighbor algorithm.

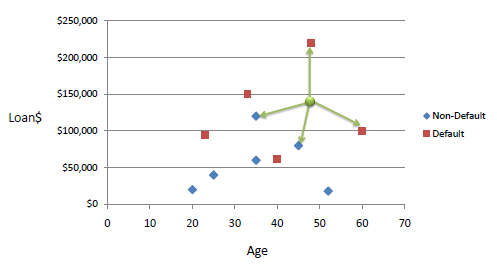

Another application is credit rating: collect financial characteristics and compare people with similar financial features against a database. In pattern recognition, the k-nearest neighbors algorithm is a non-parametric method proposed by Thomas Cover, used for classification and regression.

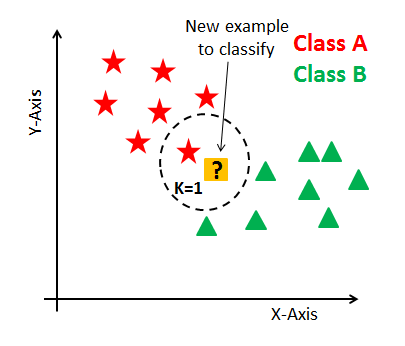

An object is classified by a plurality vote of its neighbors, with the object being assigned to the class most common among its k nearest neighbors. The KNN algorithm assumes similarity between the new case and the available cases, and puts the new case into the category that is most similar to the available categories. K nearest neighbour is a simple algorithm that stores all the available cases and classifies new data or a new case based on a similarity measure.

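Here is the k nearest neighbor explanation with an example. The wines are described by two chemical components called rutine and myricetin. In scikit-learn's KNeighborsClassifier, the n_neighbors parameter is the number of neighbors to use by default for kneighbors queries.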

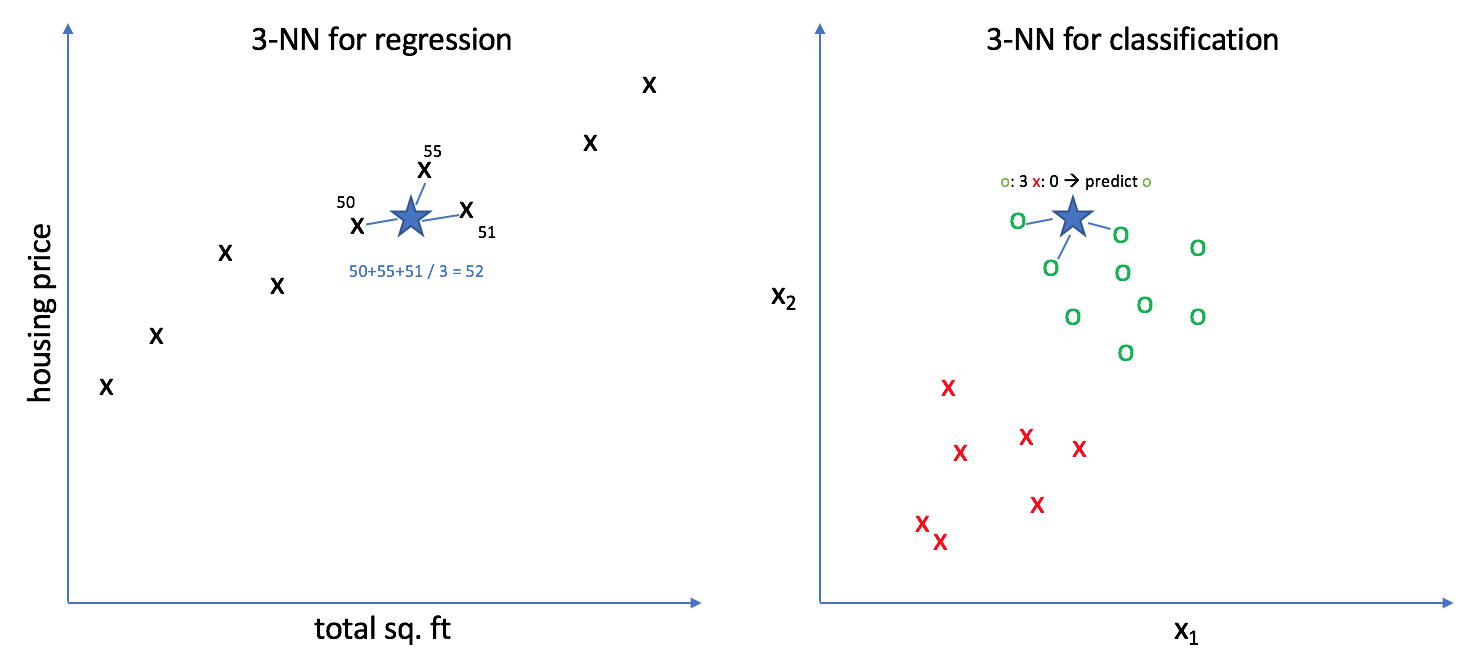

The output depends on whether KNN is used for classification or regression. In scikit-learn, the weights parameter (uniform, distance, or a callable; default uniform) is the weight function used in prediction.
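To make the difference concrete, here is a rough plain-Python sketch of the two output types and the two built-in weighting options. It is not scikit-learn's code: the helper names are invented for this example, Euclidean distance is assumed, the data is hypothetical, and the tiny epsilon used to avoid dividing by zero is a simplification.

```python
import math
from collections import defaultdict

def k_nearest(query, training, k):
    """Return the k (distance, target) pairs closest to `query`."""
    scored = sorted(((math.dist(query, x), y) for x, y in training),
                    key=lambda pair: pair[0])
    return scored[:k]

def knn_regress(query, training, k):
    """Regression: the output is the average target of the k neighbors."""
    nearest = k_nearest(query, training, k)
    return sum(y for _, y in nearest) / len(nearest)

def knn_vote(query, training, k, weights="uniform"):
    """Classification: the output is the label with the largest total weight."""
    totals = defaultdict(float)
    for distance, label in k_nearest(query, training, k):
        if weights == "uniform":
            totals[label] += 1.0                       # every neighbor counts equally
        else:
            totals[label] += 1.0 / (distance + 1e-12)  # closer neighbors count more
    return max(totals, key=totals.get)

# Hypothetical 1-D data: one close neighbor, two farther ones.
labelled = [((0.0,), "near"), ((3.0,), "far"), ((3.2,), "far")]
uniform_label = knn_vote((1.0,), labelled, k=3, weights="uniform")    # "far" wins 2 to 1
distance_label = knn_vote((1.0,), labelled, k=3, weights="distance")  # "near" wins on weight

# Hypothetical regression data: the 3 closest targets are 10, 20 and 30.
numeric = [((1.0,), 10.0), ((2.0,), 20.0), ((3.0,), 30.0), ((10.0,), 100.0)]
estimate = knn_regress((2.1,), numeric, k=3)
```

With uniform weights the two farther points outvote the single close one; with distance weights the close point carries enough weight to win.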

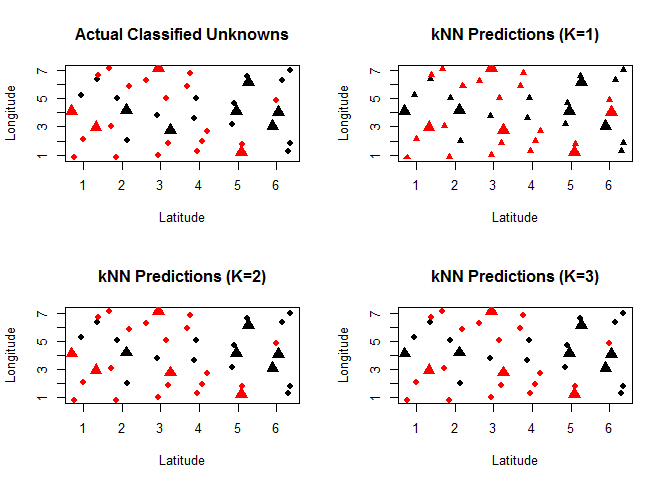

scikit-learn describes KNeighborsClassifier as a classifier implementing the k-nearest neighbors vote. Using the k nearest neighbors, we can classify the test objects.

See the calculation shown in the snapshot below. K nearest neighbour is one of the simplest machine learning algorithms based on the supervised learning technique. In the wine example, the wines that have been tested are placed on a graph based on how much rutine and myricetin they contain.

In scikit-learn, the n_neighbors parameter is an int with a default of 5. In the customer example, the algorithm then searches for the 5 customers closest to Monica, i.e. most similar to Monica in terms of attributes. The k-nearest neighbors (KNN) algorithm is a simple supervised machine learning algorithm that can be used to solve both classification and regression problems.

It is mostly used to classify a data point based on how its neighbours are classified. To find the k nearest neighbors, let k be 5. Computing KNN step by step then means measuring the distance to every training sample, sorting the distances, and voting among the 5 closest.

In the R example, KNN calculates the distance between a test object and all training objects. In the customer example, if 4 of Monica's 5 nearest neighbors had a medium T-shirt size and 1 had a large T-shirt size, then your best guess for Monica is a medium T-shirt. For the wine example, consider a measurement of rutine vs. myricetin level with two classes of data points, red and white wines.
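The T-shirt guess for Monica is just a count over the labels of her 5 nearest neighbors. A small sketch, assuming the neighbors have already been found (the variable names are made up for illustration):

```python
from collections import Counter

# T-shirt sizes of Monica's 5 nearest neighbors: 4 medium, 1 large.
neighbor_sizes = ["medium", "medium", "large", "medium", "medium"]

counts = Counter(neighbor_sizes)
best_guess, votes = counts.most_common(1)[0]  # label with the most votes
share = votes / len(neighbor_sizes)           # fraction of neighbors that agree

print(best_guess, share)  # medium 0.8
```

The share of agreeing neighbors can serve as a rough indication of how clear-cut the guess is.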

K nearest neighbor is an algorithm used mainly for classification.